Pluribus Networks - Whitebox Fabric #NFD16

On day two of Networking Field Day, Pluribus Networks gave us a rundown on what is possible with their Netvisor OS, whitebox hardware, and a distributed architecture. I was impressed with the flexibility of the solution, but like any design choice, there are some limitations to be aware of.

As a relative newcomer to the Pluribus world, I wanted to know what Pluribus was made of. The answer?

- Whitebox (or Pluribus branded) switch hardware

- Layer 3 connectivity between switches

- Pluribus Netvisor Operating System

Hardware

Most switch hardware is now pretty generic, so Pluribus gives you the choice to run Dell, D-Link, Edge-Core or Pluribus hardware, which means the magic is really in the software. I'm not going to spend time going over hardware options, because with those choices, you should be able to easily find an option that fits your price/performance needs - that's the beauty of whitebox.

Layer 3

Next on the list, why L3 connectivity? What about my precious L2 network? Well, the Netvisor OS uses VXLAN for L2 connectivity, which gives you some very interesting design flexibility. For example, you can run Cisco or Arista in your core/spine, and just use Pluribus at the edge/leaf nodes (where L2 actually matters). Your core provides L3 connectivity, and your Pluribus edge will carry all your L2 traffic as needed over L3 via VXLAN. Why would you do this? How about lowering upgrade costs by leaving your core as-is? Or running two different fabrics side by side? Maybe spanning your L2 edge across datacenters? Well...let's not get too crazy...
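To make the "L2 over L3" idea a little more concrete, here is a minimal sketch of the VXLAN header itself as defined in RFC 7348. It has nothing Netvisor-specific in it; the function names are my own, and the outer Ethernet/IP/UDP headers that a real switch would add are left out.

```python
import struct

VXLAN_UDP_PORT = 4789          # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08    # "I" flag: the VNI field carries a valid ID


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header from RFC 7348."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8 flag bits followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prefix an L2 frame with a VXLAN header (outer headers omitted)."""
    return vxlan_header(vni) + inner_frame


def parse_vni(payload: bytes) -> int:
    """Read the VNI back out of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8
```

The original Ethernet frame rides behind this header inside a UDP datagram, which is why the core only ever needs to route IP. With an untagged outer Ethernet header and IPv4, the encapsulation adds 50 bytes per packet, so the L3 core's MTU needs headroom for it.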

Pluribus will advertise Netvisor OS as a DCI solution, but like I mentioned before, every design has a trade-off, and generally L2 DCI has a steep price - Pluribus is no different. It is important to ask questions like "What happens to both sides of the fabric if the DCI goes down?" The good part? The Netvisor fabric will continue to forward traffic as-is in split brain mode. The bad? You will be locked out of making any configuration changes on either side until the DCI is restored. Personally, I would run two separate fabrics that are not dependent on the DCI.
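Here is a toy model of that trade-off, seen from one side of the split. This is only my mental picture of the behavior described in the presentation, not how Netvisor is implemented; the `Fabric` class and its method names are hypothetical.

```python
class Fabric:
    """Toy model: config changes need every fabric member to be reachable."""

    def __init__(self, members):
        self.members = set(members)
        self.reachable = set(members)
        self.config = {}

    def link_down(self, lost_members):
        # The DCI fails: switches on the far side are no longer reachable.
        self.reachable -= set(lost_members)

    def link_restored(self):
        self.reachable = set(self.members)

    def forwarding_ok(self):
        # Existing state keeps forwarding even while the fabric is split.
        return True

    def apply_change(self, key, value):
        # Assumption: a change is refused unless the whole fabric can see it.
        if self.reachable != self.members:
            raise RuntimeError("fabric partitioned: configuration is locked")
        self.config[key] = value
```

In this model, a fabric stretched across two datacenters refuses every `apply_change` call while the DCI is down, on both sides, even though each side is otherwise healthy. Two independent fabrics would each have only local members, so a DCI failure would not lock either one.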

Netvisor Operating System

Now, the most interesting part of the Pluribus solution - Netvisor OS. There are so many different features and components, but I'm only going to focus on two areas that grabbed my attention during the presentation:

- Management Capabilities

- Network Virtualization

Management Capabilities

Immediately I noticed that there are no controllers running the Netvisor OS fabric. Each switch is running the Netvisor OS application, with full-mesh connectivity to all other switches in the fabric. You can log into the CLI, Web UI, or API from any switch and manage the entire fabric! Besides fabric-wide configuration, you can also revert changes with fabric-wide rollback. While controller-less has its benefits, there are some scaling limitations to be aware of that other "controller-required" solutions do not encounter. Right now, a Pluribus network is limited to 40 switches in a single fabric. Considering two top-of-rack switches should easily be able to support 1000 VMs in any modern design (in half a rack), this shouldn't be a concern for many, but it's good to be aware.
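Some quick back-of-the-napkin math on those numbers. This is my own arithmetic, not anything Pluribus presented, and it assumes every one of the 40 switches is a top-of-rack leaf deployed in pairs (with the spine being some other vendor's gear outside the fabric).

```python
def full_mesh_pairs(switches: int) -> int:
    """Number of switch-to-switch pairs in a full mesh: n * (n - 1) / 2."""
    return switches * (switches - 1) // 2


def vm_capacity(switches: int, switches_per_pair: int = 2,
                vms_per_pair: int = 1000) -> int:
    """Rough VM count if each ToR pair supports `vms_per_pair` VMs."""
    return (switches // switches_per_pair) * vms_per_pair


if __name__ == "__main__":
    print(full_mesh_pairs(40))  # 780 pairs at the 40-switch limit
    print(vm_capacity(40))      # 20000 VMs across 20 ToR pairs
```

The pair count grows with the square of the switch count, which is one plausible reason a controller-less full mesh carries a fabric size limit. On the other side of the ledger, 20 ToR pairs at 1000 VMs each is a lot of room before that limit bites.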

Pluribus has also built out integrations with other management platforms, like VMware and Nutanix. The VMware integration works closely with all the major VMware products - ESXi, VSAN, and NSX. The Netvisor OS switch fabric will automatically build networks and VXLAN tunnels to support new ESXi hosts coming online and on-demand vMotions to ensure full VM mobility across the fabric. VSAN cluster multicast configurations are completely automated on the network side. NSX is particularly interesting, as Netvisor OS doesn't just provide L3 connectivity for NSX networks, it also provides visibility into the traffic inside the NSX VXLAN tunnels.

On top of management flexibility and third-party integrations, Pluribus has also built out an analytics/telemetry platform that allows deep visibility into all your network traffic. In the future, I'd like to see Pluribus provide a tight integration with Kubernetes for container networking.

Network Virtualization

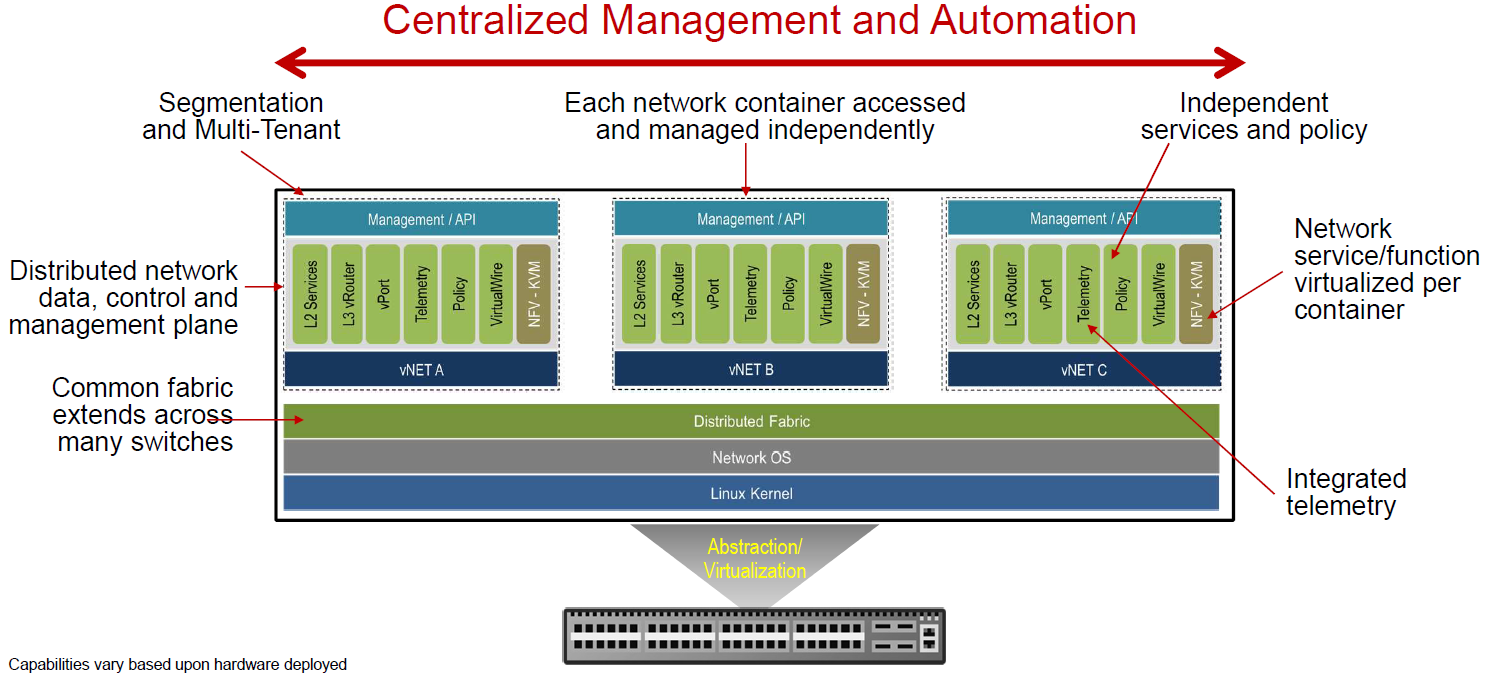

100% Network/Tenant Virtualization. Not everyone needs this feature, but if you do, this is incredibly slick. Pluribus has virtualized the entire network stack for us, just like server hypervisors have done for the systems guys. When you create a new "tenant" in Netvisor OS, the tenant will have their own management portal, full set of VLANs, routing protocols, and policies - all running on the same underlying hardware. Tenant "A" can log into the CLI and make changes completely independent of Tenant "B". This concept may not be completely new if you are used to working with VDCs on Cisco Nexus 7ks, but if you are used to working on Nexus 9ks (which is what Cisco is pushing for all new datacenter deployments - especially if you are using VXLAN), then this feature will be new to you.
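If it helps to picture it, here is a tiny model of what "their own full set of VLANs" means in practice. The `Tenant` class is purely illustrative and hypothetical; it says nothing about how Netvisor stores or enforces tenant state.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Tenant:
    """Toy tenant with a private VLAN namespace."""
    name: str
    vlans: Dict[int, str] = field(default_factory=dict)

    def add_vlan(self, vlan_id: int, description: str) -> None:
        # Valid 802.1Q VLAN IDs are 1-4094 (0 and 4095 are reserved).
        if not 1 <= vlan_id <= 4094:
            raise ValueError("VLAN ID must be between 1 and 4094")
        self.vlans[vlan_id] = description


# Both tenants use VLAN 100 without colliding, because each has its own table.
tenant_a = Tenant("A")
tenant_b = Tenant("B")
tenant_a.add_vlan(100, "A web tier")
tenant_b.add_vlan(100, "B database tier")
```

The point is simply that Tenant "A" changing VLAN 100 has no effect on Tenant "B" and its VLAN 100, even though both live on the same physical switches.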

Even if you're not a multi-tenant datacenter provider, virtualizing your network infrastructure can be used for a number of scenarios:

- Separate Dev, QA and Prod environments

- Separate External, DMZ and Internal networks

- PCI network segments

- Guest and Contractor networks

- Automation testing

This is just another way for the network to provide value: reducing the cost of building separate environments, and decreasing risk by limiting changes to each unique environment.

For new datacenter builds, or even milder datacenter refreshes, Pluribus has the capability to fit in with a solid set of features and integrations. Giving network operators the choice to bring their own hardware means they're not limited by the vendor's hardware pricing or design. And while Pluribus is far from the only company providing a network operating system on whitebox hardware, their network management and virtualization stack are differentiating features that deserve a look.

Disclosure: I attended this presentation as part of Networking Field Day, a Tech Field Day event. All expenses related to the event were sponsored, in addition to any free swag given out by vendors. All opinions expressed on The Network Stack are my own and not those of Tech Field Day, vendors or my employer.