AWS Transit Gateway

At re:Invent 2018, AWS announced the Transit Gateway, finally giving us a native solution to provide scalable transit connectivity. After attending sessions and deploying Transit Gateway, I wanted to dive into the solution and see what is possible.

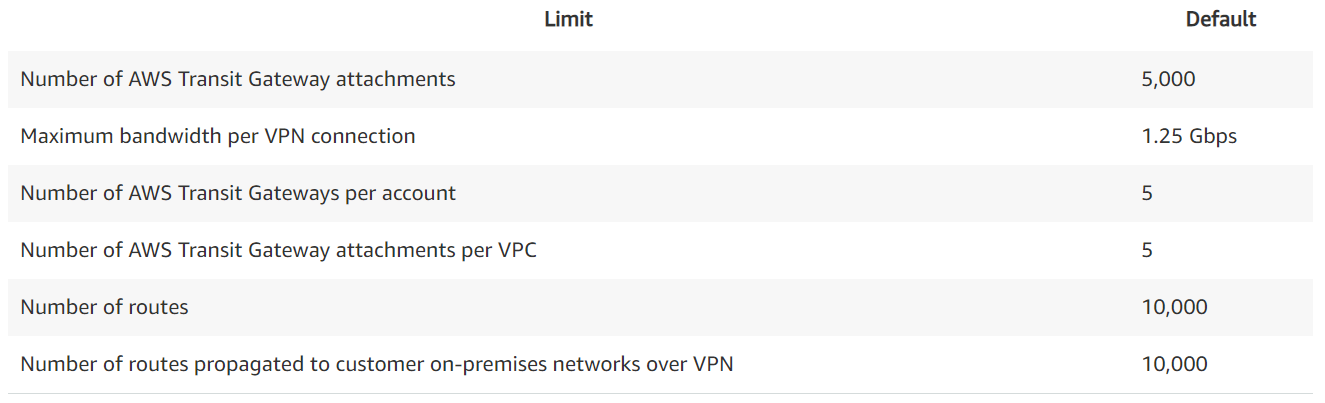

Tech Specs

In case you haven't read the official docs, the AWS Transit Gateway is a regional layer 3 router that connects VPCs, VPNs, and (soon) Direct Connect across multiple accounts, with support for multiple route tables (VRFs). The Transit Gateway (TGW) also has much higher scalability limits than VPC peering.

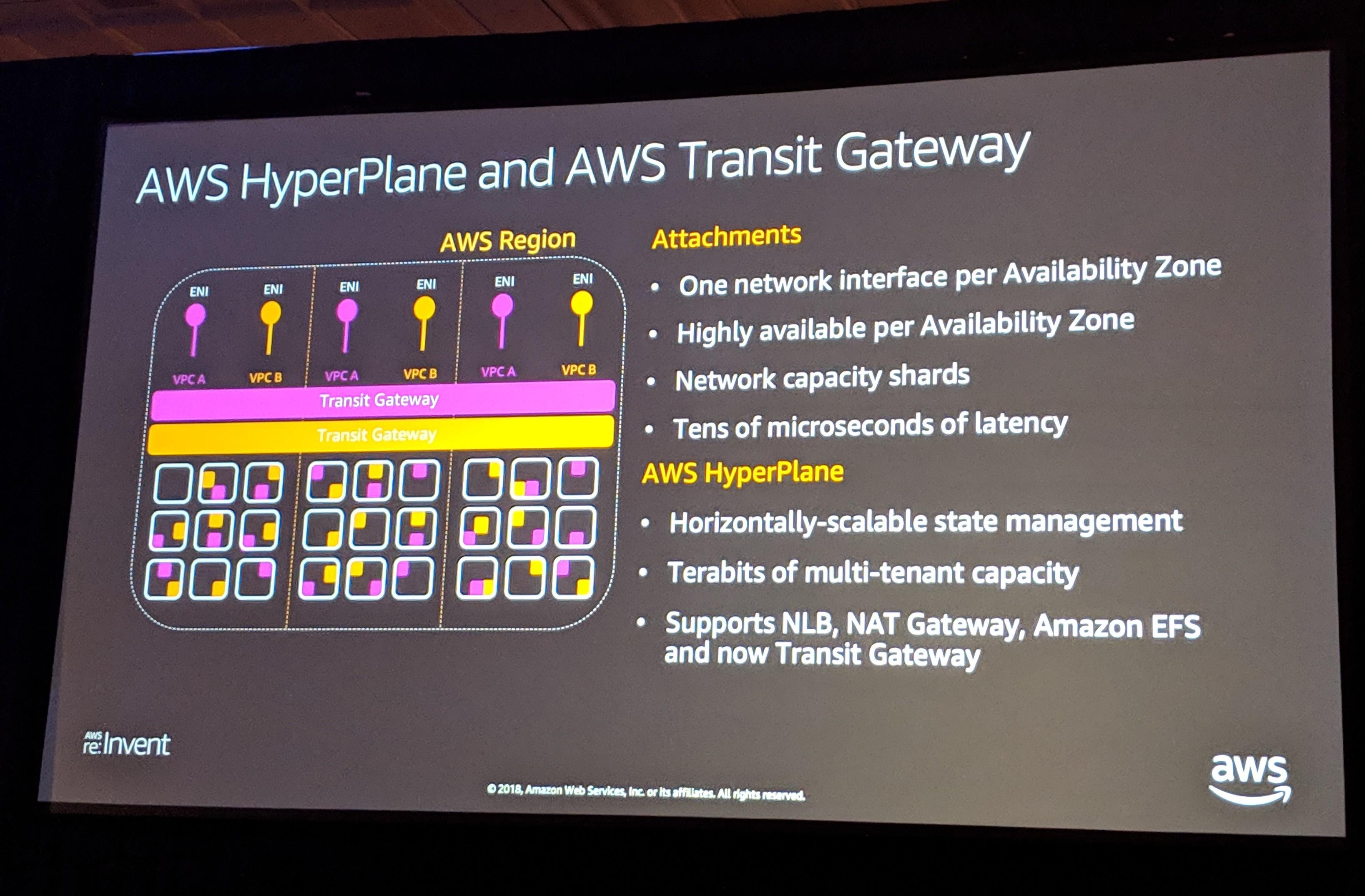

How did Amazon put a big router in the cloud?

The Transit Gateway is built on AWS Hyperplane, an internal AWS service that provides terabits of capacity. This is tested and proven technology that already powers NLB, NAT Gateway, and EFS:

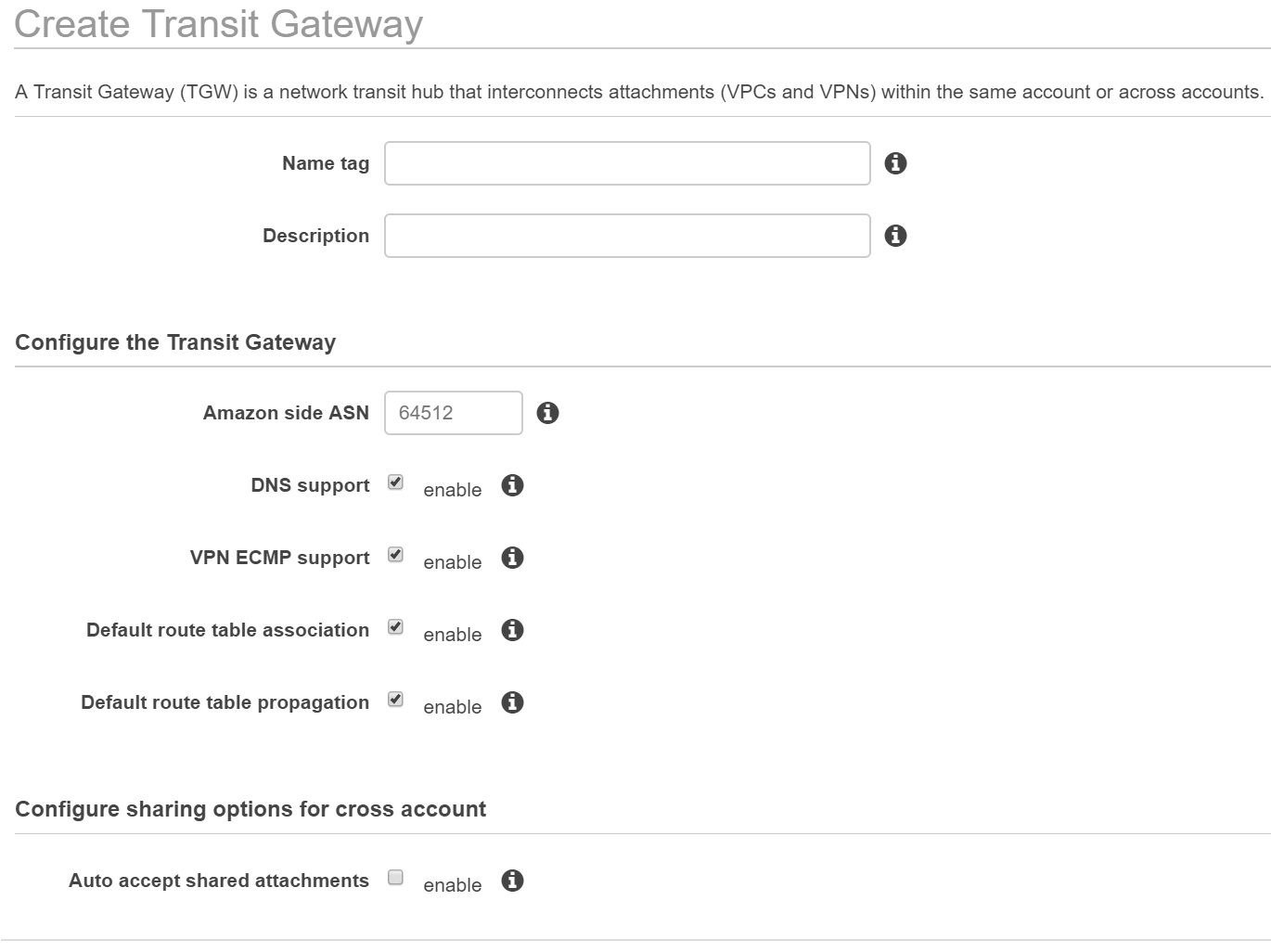

Configuration

To set up a TGW, log into your AWS account and open the VPC console. Clicking "Create Transit Gateway" brings up the creation form.

A couple of the options weren't immediately clear to me, so let's go through them all:

- Name tag: Up to you; I use $accountName-$region-tgw.

- Description: Same as above.

- Amazon side ASN: Depends on your routing policy. I use a unique private ASN per TGW for routing-policy flexibility.

- DNS support: Allows private DNS resolution across VPCs. I would keep this checked.

- VPN ECMP support: A single VPN tunnel is limited to 1.25 Gbps, so enable ECMP and use multiple VPN connections to aggregate VPN throughput. AWS has successfully tested up to 50 Gbps with ECMP, but theoretically there is "no limit".

- Default route table association: Automatically associate attachments with the default route table. For example, when you attach a VPC to the TGW, that attachment is associated with the default route table. This depends on how you use route tables, but I would keep this checked.

- Default route table propagation: Automatically propagate routes from attachments into the default route table. This allows every attachment associated with the default route table to route to every other one (assuming the VPC route tables are also configured). Depending on how you use the TGW, you may not want this. If you do not want spoke-to-spoke connectivity, leave this unchecked.

- Auto accept shared attachments: Automatically accept cross-account attachments to your TGW. I would leave this unchecked.

CAUTION: You cannot change the above values later. You would need to create a new TGW.
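To make these options concrete, here is a minimal Python sketch (a local model, not the AWS API) that captures them as an immutable record, mirroring the caution that they cannot be changed after creation. The class `TgwOptions` and the helpers `vpn_tunnels_needed` and `check_unique_asns` are hypothetical. The private ASN ranges (64512 to 65534 and 4200000000 to 4294967294) are the ones AWS documents for the Amazon side ASN, and the tunnel estimate simply divides a target throughput by the 1.25 Gbps per-tunnel limit mentioned above.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

# Private ASN ranges accepted for the Amazon side of a TGW (16-bit and 32-bit).
PRIVATE_ASN_RANGES = ((64512, 65534), (4200000000, 4294967294))

# Per-tunnel VPN bandwidth limit discussed above, in Gbps.
VPN_TUNNEL_GBPS = 1.25


@dataclass(frozen=True)  # frozen: like the real options, these can't change after creation
class TgwOptions:
    """Hypothetical record of TGW creation options; defaults follow the choices above."""
    name: str
    amazon_side_asn: int
    dns_support: bool = True
    vpn_ecmp_support: bool = True
    default_route_table_association: bool = True
    default_route_table_propagation: bool = True
    auto_accept_shared_attachments: bool = False

    def __post_init__(self) -> None:
        # Reject ASNs outside the private ranges.
        if not any(lo <= self.amazon_side_asn <= hi for lo, hi in PRIVATE_ASN_RANGES):
            raise ValueError(f"ASN {self.amazon_side_asn} is not a private ASN")

    def vpn_tunnels_needed(self, target_gbps: float) -> int:
        """Estimate how many VPN tunnels ECMP must spread traffic across."""
        if not self.vpn_ecmp_support:
            raise ValueError("enable ECMP to aggregate throughput across tunnels")
        return math.ceil(target_gbps / VPN_TUNNEL_GBPS)


def check_unique_asns(options: list[TgwOptions]) -> None:
    """Enforce the one-private-ASN-per-TGW convention described above."""
    asns = [o.amazon_side_asn for o in options]
    if len(asns) != len(set(asns)):
        raise ValueError("ASNs must be unique per TGW")
```

For example, `TgwOptions("prod-us-east-1-tgw", 64512).vpn_tunnels_needed(5)` returns 4. Keep in mind that ECMP spreads traffic per flow, so any single flow is still capped at one tunnel's bandwidth; the tunnel count is only an aggregate estimate.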

Once your TGW is created, you need to "attach" it to a VPC or VPN connection. For VPN, this should look familiar: you use BGP or static routing to connect to a customer gateway.

For VPC, things start to deviate from previous transit solutions. First, you must select a VPC subnet in every AZ you plan to use. This provisions an ENI in each selected subnet, which the TGW then uses to route traffic into that AZ. You can select only one subnet per AZ. This does not affect routing to other subnets in the AZ; it's just what the TGW uses to reach that AZ.

Then, you manually add routes to the VPC route table that point to the TGW as the target. The VPC route table limits have not changed (50 routes by default, 100 max), so it is best practice to use summary routes to the TGW. I would just route the RFC1918 address ranges (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16) to the TGW; any VPC peering you set up will still work, because its more specific route wins.

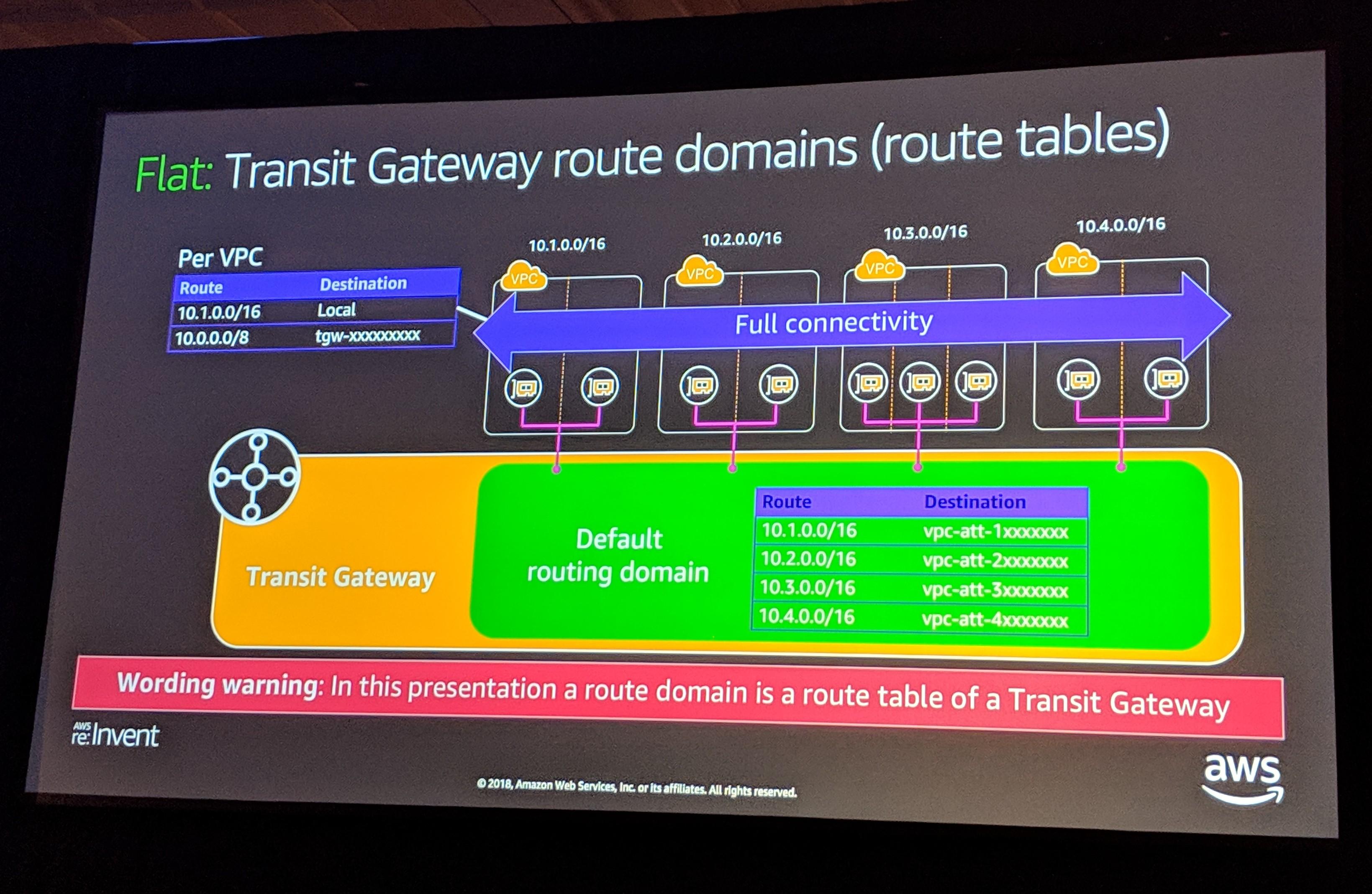
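The summary-route advice relies on longest-prefix matching: the most specific route containing the destination wins. The Python sketch below uses the standard `ipaddress` module to model a VPC route table; the peering target `pcx-example` and the `10.1.0.0/16` and `10.20.0.0/16` CIDRs are made-up values for illustration, and `next_hop` is a hypothetical helper rather than an AWS call.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical VPC route table: the three RFC1918 summaries point at the TGW,
# plus the VPC's own CIDR (local) and one more-specific peering route.
ROUTES: Dict[str, str] = {
    "10.0.0.0/8": "tgw",
    "172.16.0.0/12": "tgw",
    "192.168.0.0/16": "tgw",
    "10.1.0.0/16": "local",
    "10.20.0.0/16": "pcx-example",
}

VPC_ROUTE_LIMIT = 50  # default VPC route table limit cited above


def next_hop(routes: Dict[str, str], destination: str) -> Optional[str]:
    """Return the target of the longest (most specific) prefix containing destination."""
    addr = ipaddress.ip_address(destination)
    best = None  # (network, target) of the most specific match so far
    for cidr, target in routes.items():
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, target)
    return best[1] if best else None
```

Here `next_hop(ROUTES, "10.20.3.4")` returns `"pcx-example"`, while `next_hop(ROUTES, "10.30.0.1")` falls through to the `10.0.0.0/8` summary and returns `"tgw"`. Five routes cover every private destination, leaving plenty of headroom under the route limit.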

Once the TGW is attached to your VPCs, you then need to associate each attachment with a route table and propagate its routes into a route table. If you left all the default options when creating the TGW, both of these actions happen automatically. This provides flat, any-to-any VPC routing out of the box.

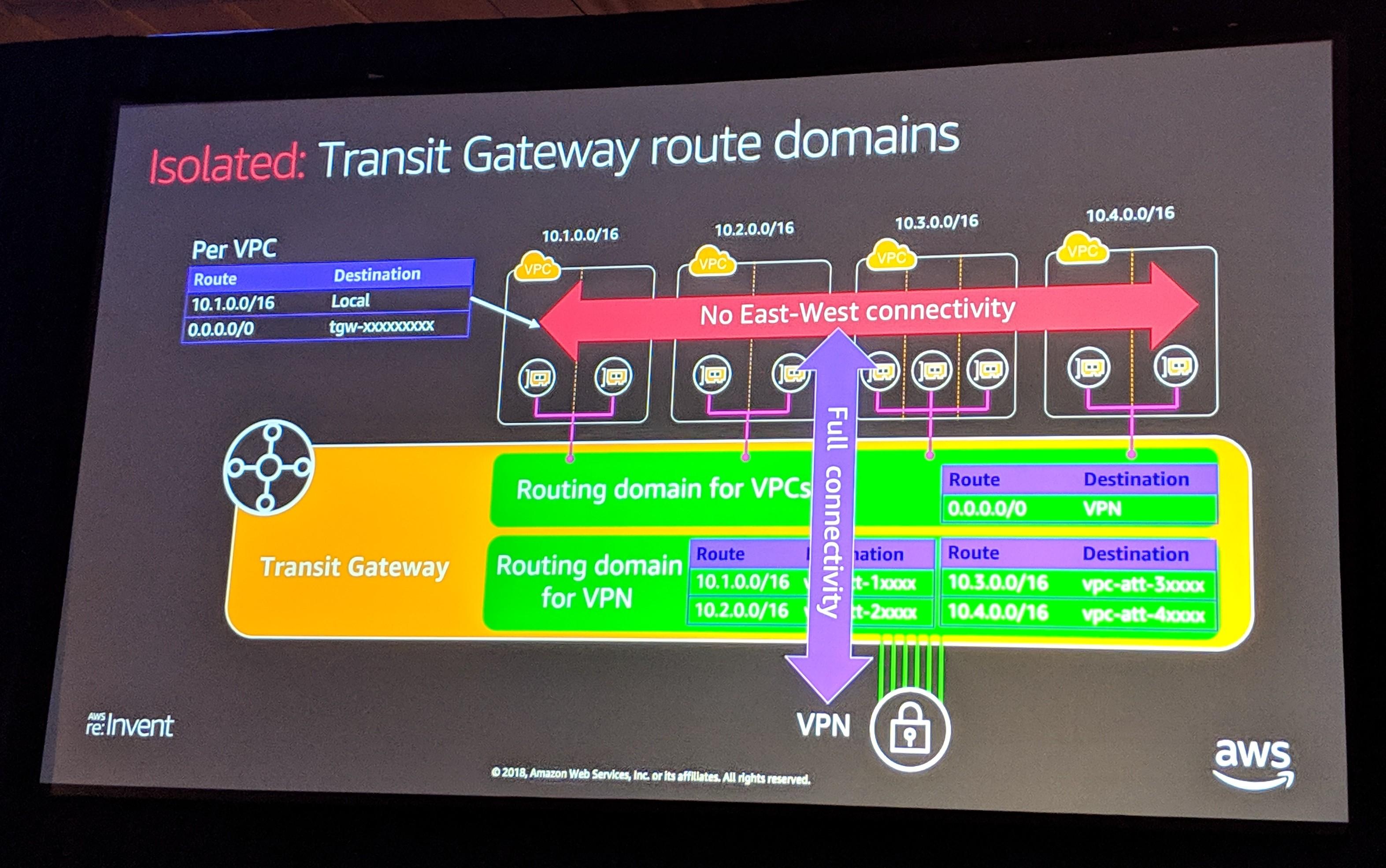
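Association and propagation are two separate relationships: association picks the TGW route table an attachment uses to look up destinations, and propagation picks which route tables learn the attachment's CIDR. This toy Python model (not the AWS API; the class and method names are hypothetical) shows how the two default options produce flat, any-to-any routing. For brevity it matches destinations by exact CIDR rather than longest prefix, and it ignores the VPC-side routes to the TGW.

```python
from __future__ import annotations

from collections import defaultdict


class TransitGatewayModel:
    """Toy model of TGW route tables (hypothetical, for illustration only)."""

    def __init__(self) -> None:
        self.cidrs: dict[str, str] = {}  # attachment -> CIDR
        self.association: dict[str, str] = {}  # attachment -> table it looks up in
        self.tables: defaultdict[str, dict[str, str]] = defaultdict(dict)  # table -> {CIDR: attachment}

    def attach(self, attachment: str, cidr: str,
               auto_associate: bool = True, auto_propagate: bool = True) -> None:
        self.cidrs[attachment] = cidr
        # The two "default route table" checkboxes map to these two calls.
        if auto_associate:
            self.associate(attachment, "default")
        if auto_propagate:
            self.propagate(attachment, "default")

    def associate(self, attachment: str, table: str) -> None:
        self.association[attachment] = table

    def propagate(self, attachment: str, table: str) -> None:
        self.tables[table][self.cidrs[attachment]] = attachment

    def can_reach(self, src: str, dst: str) -> bool:
        """True if src's associated table has a route to dst's CIDR."""
        table = self.association.get(src)
        return table is not None and self.tables[table].get(self.cidrs[dst]) == dst


tgw = TransitGatewayModel()
for vpc, cidr in [("vpc-a", "10.1.0.0/16"), ("vpc-b", "10.2.0.0/16"), ("vpc-c", "10.3.0.0/16")]:
    tgw.attach(vpc, cidr)
# Every VPC can reach every other VPC through the default route table.
assert all(tgw.can_reach(a, b) for a in tgw.cidrs for b in tgw.cidrs if a != b)
```

Turning off either checkbox in the model (`auto_associate=False` or `auto_propagate=False`) breaks reachability for that attachment, which is why both are needed for the flat design.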

If you are looking for isolated hub-and-spoke routing (where spokes cannot talk to each other), you need to change the configuration:

- Create a new TGW route table (VRF) for your hub location, which could be another VPC or VPN

- Associate the hub attachment with the TGW hub route table

- Propagate the spoke routes into the TGW hub route table

- Propagate the hub routes into the TGW default route table, used by the spokes

- If the default route table already contains the spoke routes, remove them (for propagated routes, this means disabling the spoke propagations into the default route table). You can always add routes back if needed. This prevents the spokes from routing to each other while still allowing access to the hub.

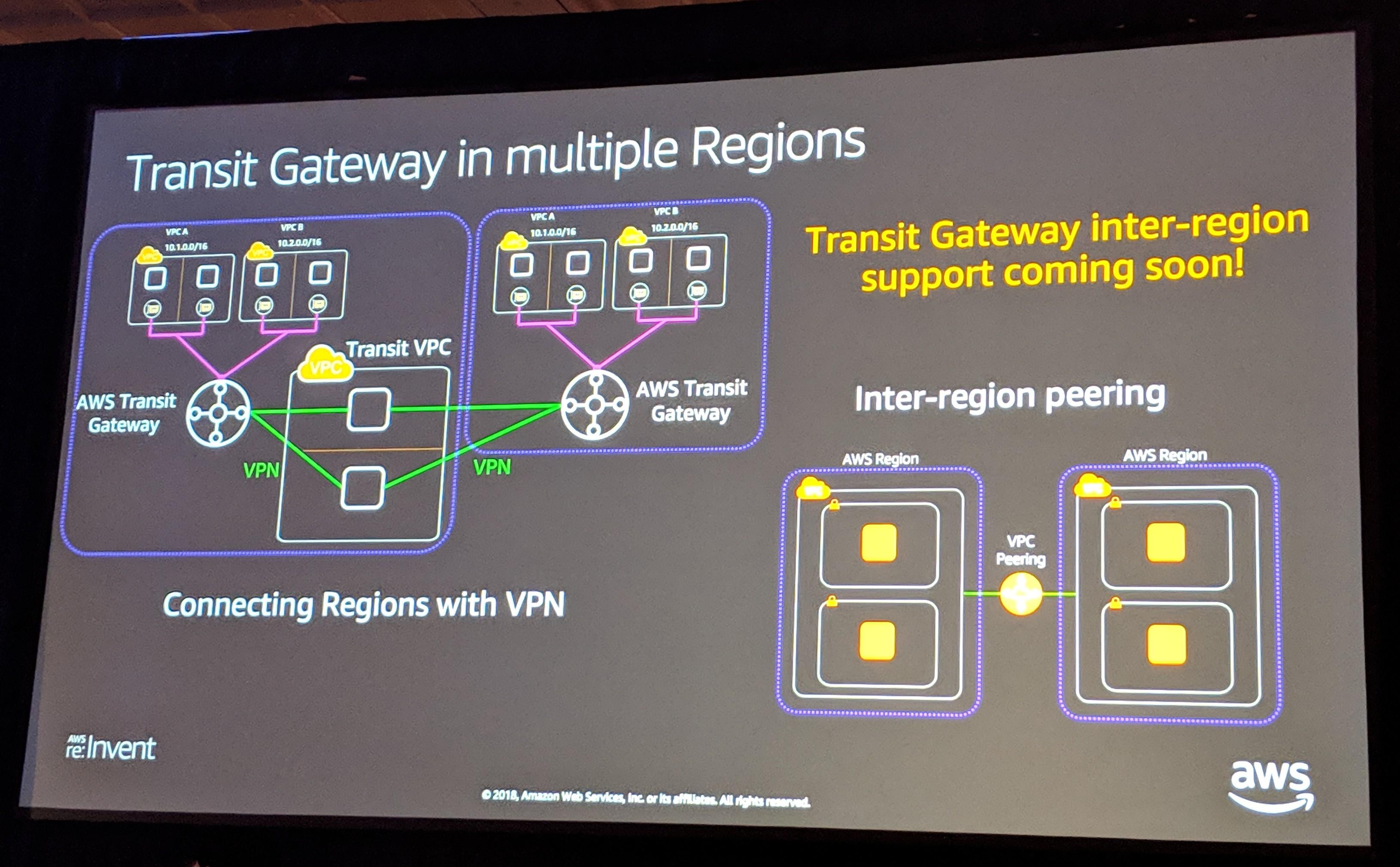
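The steps above can be replayed in a small Python sketch that uses plain dictionaries as route tables (a toy model, not the AWS API). The attachment names, CIDRs, and the functions `hub_and_spoke` and `can_reach` are hypothetical, and lookups use exact CIDR matching for brevity. Note that connectivity needs a route in each direction: the spokes reach the hub through the default table, and the hub answers through its own table.

```python
from __future__ import annotations

# Hypothetical attachments: one hub and two spokes.
CIDRS = {"hub": "10.0.0.0/16", "spoke-a": "10.1.0.0/16", "spoke-b": "10.2.0.0/16"}


def hub_and_spoke(cidrs: dict[str, str], hub: str):
    """Apply the hub-and-spoke steps to a flat, default-only configuration."""
    spokes = [att for att in cidrs if att != hub]
    # Starting point: flat config, everything associated with and propagated into "default".
    tables = {"default": {cidr: att for att, cidr in cidrs.items()}}
    association = {att: "default" for att in cidrs}

    tables["hub"] = {}  # 1. create a hub route table
    association[hub] = "hub"  # 2. associate the hub attachment with it
    for s in spokes:  # 3. propagate spoke routes into the hub table
        tables["hub"][cidrs[s]] = s
    tables["default"][cidrs[hub]] = hub  # 4. propagate hub routes into default
    for s in spokes:  # 5. remove the spoke routes from default
        tables["default"].pop(cidrs[s], None)
    return tables, association


def can_reach(tables, association, cidrs, src: str, dst: str) -> bool:
    """True if src's associated table has a route to dst's CIDR pointing at dst."""
    return tables[association[src]].get(cidrs[dst]) == dst


tables, association = hub_and_spoke(CIDRS, hub="hub")
assert can_reach(tables, association, CIDRS, "spoke-a", "hub")
assert can_reach(tables, association, CIDRS, "hub", "spoke-a")
assert not can_reach(tables, association, CIDRS, "spoke-a", "spoke-b")
```

After step 5 the default table holds only the hub route, so a spoke has no path to another spoke even though both remain attached to the same TGW.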

Finally, since TGW is a regional service, how do you connect multiple regions? Right now, this has to be done with a transit VPC or an on-premises router. But if you can wait until early 2019, AWS should have a native solution!

To summarize, the two architectures discussed here were:

- Flat, any-to-any connectivity. Achieved with the default TGW configuration. All associations and propagations go into the default route table.

- Isolated, hub-and-spoke connectivity. Requires another route table for the hub, and changing route propagation so the spokes only see the hub's routes. The hub route table should have propagated routes from all the spokes.

AWS Transit Gateway is a great addition to the AWS networking stack and provides an extremely scalable, secure solution for routing in the cloud.

Official Docs

- Introducing AWS Transit Gateway
- AWS Transit Gateway Solution
- AWS Transit Gateway Docs